At The Edge Of Determinism: Parallel Transaction Lifecycle in Solana Sealevel and Sui Object Runtime

Solana vs. The World

Introduction

Solana and Sui are prominent high-performance layer-1 blockchains. Both can handle high transaction volumes at very low cost without sacrificing scalability, speed, or decentralization. Each introduces novel technologies to achieve scalability, security, and decentralization at the same time. As a result, both protocols are recognized in the industry as cutting-edge blockchains that address the limitations of older blockchains such as Ethereum and Bitcoin.

At the core of these protocols is their ability to process tens of thousands of transactions in parallel. This produces very high throughput under both theoretical and practical conditions. Solana reaches up to 65,000 TPS under theoretical conditions and up to 5,000 TPS under practical conditions. Sui has been shown to handle up to 10,000 TPS under practical conditions. Both chains also confirm transactions in well under a second.

In this research article, we analyze the mechanisms behind this high transaction performance through the lens of the transaction lifecycle. The goal is a comprehensive, comparative analysis of how the different execution models of these two chains let them achieve high throughput with very low finality times.

Transaction Lifecycle

Blockchains process transactions, and transactions change state. The lifecycle of a transaction is often the clearest expression of a blockchain's design philosophy. Understanding it gives technical stakeholders important insight into several questions: how the chain is optimized for throughput and safety, what determinism guarantees it offers, what its relative engineering costs are, and which pain points may arise under varying real-world conditions. In the following sections, we look at the stages a transaction goes through from submission to finalization, and at how this process affects execution at different levels of each chain.

Solana

The Solana blockchain uses Proof-of-Stake (PoS) as its consensus mechanism and Proof-of-History (PoH) as its timekeeping mechanism to order transactions efficiently.

The Solana design model is account-centric, which is another way of saying “everything on Solana is an account”. Accounts store both state and program code. Transactions contain instructions that modify the state of accounts. Nodes that participate in consensus and process transactions are called validators. A transaction must pass through a sequence of processing steps inside a validator before it can modify an account; this process is called pipelining. The individual points a transaction passes through during its lifecycle are referred to as stages.

A Solana Transaction

On Solana, a transaction consists of a serialized message together with a list of signatures over that message. The first signature must come from the first key in the message's account keys, which is the fee payer.

The message in a transaction is a structure containing a header, account keys, a recent blockhash, and instructions. The header is a MessageHeader, which describes how the message's account keys are organized.

Every instruction specifies which accounts it may reference and what permissions it requires of each. Those specifications are whether the account is read-only or read-write, and whether the account must have signed the transaction containing the instruction.

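```rust
// Legacy Solana transaction message (types from the solana_sdk crate).
use solana_sdk::{hash::Hash, instruction::CompiledInstruction, message::MessageHeader, pubkey::Pubkey};

pub struct Message {
    pub header: MessageHeader,                  // how many accounts are signers / read-only
    pub account_keys: Vec<Pubkey>,              // single shared, permission-ordered account list
    pub recent_blockhash: Hash,                 // bounds the transaction's lifetime (replay protection)
    pub instructions: Vec<CompiledInstruction>, // refer to accounts by index into account_keys
}
```

Each instruction lists all the accounts it may access, along with the permissions it requires. A Message contains a single shared, flat list of all accounts required by all instructions in the transaction. When a Message is built, this flat list is created, and the instructions are converted into CompiledInstructions. Each CompiledInstruction refers to the accounts it requires by index into the shared account list.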

The shared account list is ordered by the permissions required of the accounts:

accounts that are writable and signers

accounts that are read-only and signers

accounts that are writable and not signers

accounts that are read-only and not signers

Given this ordering, the fields of MessageHeader describe which accounts in a transaction require which permissions.

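```rust
pub struct MessageHeader {
    /// Number of signatures required; the first this-many account keys are signers.
    pub num_required_signatures: u8,
    /// How many of the signed accounts are read-only (the last ones among the signers).
    pub num_readonly_signed_accounts: u8,
    /// How many of the unsigned accounts are read-only (the last keys in the list).
    pub num_readonly_unsigned_accounts: u8,
}
```

When multiple transactions access the same read-only accounts, the runtime may process them in parallel in a single PoH entry. Transactions that access the same read-write accounts are processed sequentially.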
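Given the ordering of the shared account list, the three header counts are enough to recover every account's permissions from its index alone. The following is a minimal sketch of that decoding. The MessageHeader struct mirrors the one shown above. Permissions and account_permissions are hypothetical names introduced only for illustration; they are not the SDK's own helpers.

```rust
/// Mirrors the header layout shown above.
pub struct MessageHeader {
    pub num_required_signatures: u8,
    pub num_readonly_signed_accounts: u8,
    pub num_readonly_unsigned_accounts: u8,
}

/// Permissions derived for one entry of the flat account list (hypothetical type).
#[derive(Debug, PartialEq, Eq)]
pub struct Permissions {
    pub is_signer: bool,
    pub is_writable: bool,
}

/// Hypothetical helper: derive the permissions of the account at `index`
/// in a flat account list of length `num_keys`, using only the header counts.
pub fn account_permissions(h: &MessageHeader, num_keys: usize, index: usize) -> Option<Permissions> {
    if index >= num_keys {
        return None;
    }
    let signers = h.num_required_signatures as usize;
    let ro_signed = h.num_readonly_signed_accounts as usize;
    let ro_unsigned = h.num_readonly_unsigned_accounts as usize;

    let is_signer = index < signers;
    let is_writable = if is_signer {
        // Signers come first: writable signers, then read-only signers.
        index < signers.saturating_sub(ro_signed)
    } else {
        // Non-signers: writable ones first, read-only ones occupy the tail.
        index < num_keys.saturating_sub(ro_unsigned)
    };
    Some(Permissions { is_signer, is_writable })
}
```

For example, take a header of (2, 1, 1) with five account keys. Index 0 is the writable fee payer and index 1 is a read-only signer. Indices 2 and 3 are writable non-signers, and index 4 is read-only.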

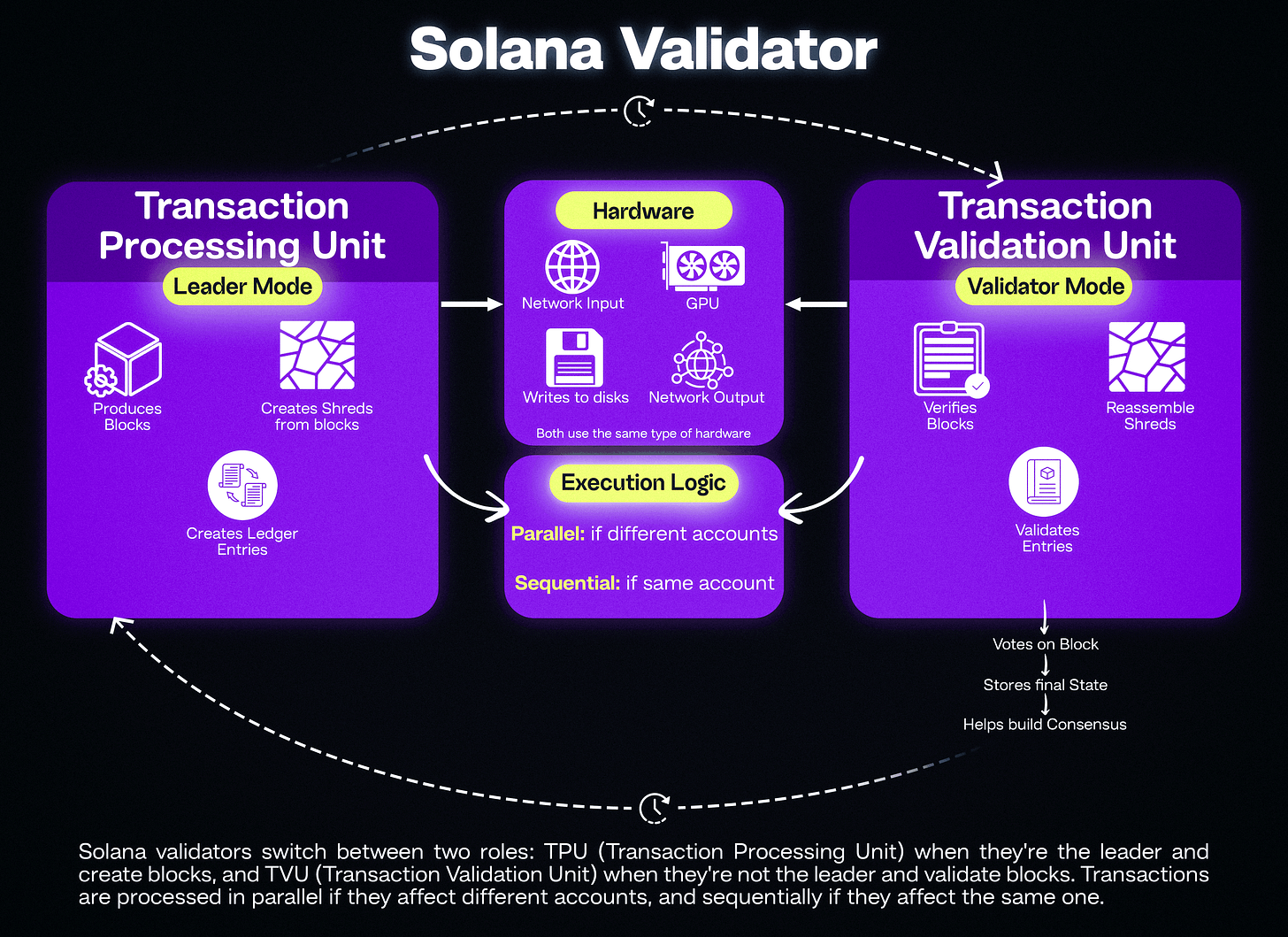

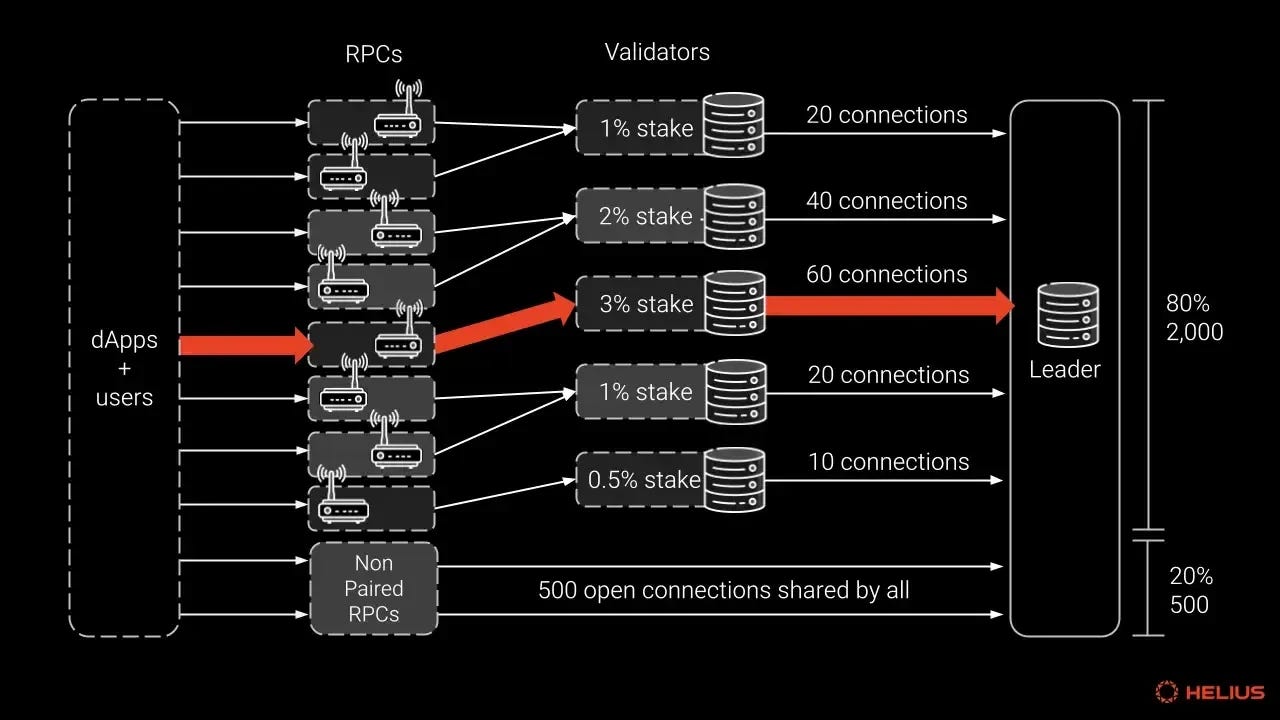

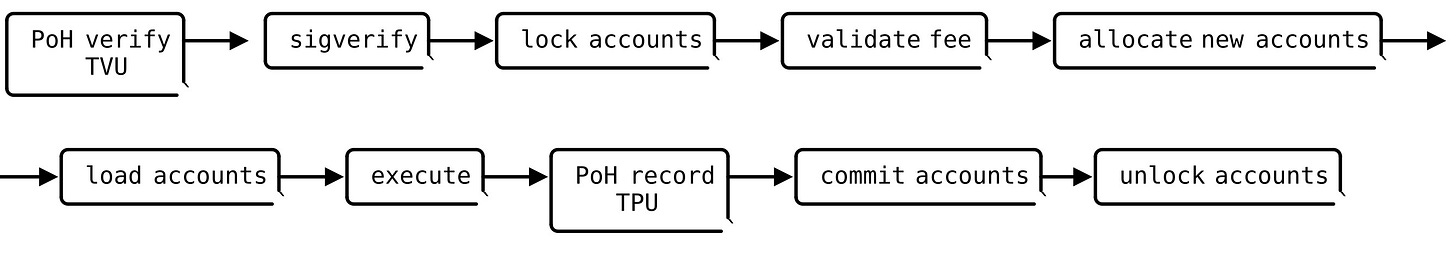

Transactions are submitted by a client and forwarded to the leader through Gulf Stream. Inside the validator, they are processed by two distinct, multi-stage pipelines: the Transaction Processing Unit (TPU) and the Transaction Validation Unit (TVU). These pipelines work with the runtime so that transactions modifying different account states are processed in parallel, while transactions modifying the same account state are processed sequentially.

The TPU runs when the validator is in leader mode (producing blocks), and the TVU runs when the validator is in validator mode (validating blocks). In both cases, the hardware being pipelined is the same: network input, GPU cards, disk writes, network output, and so on. What each pipeline does with that hardware differs. Put simply, the TPU creates ledger entries, while the TVU validates them.

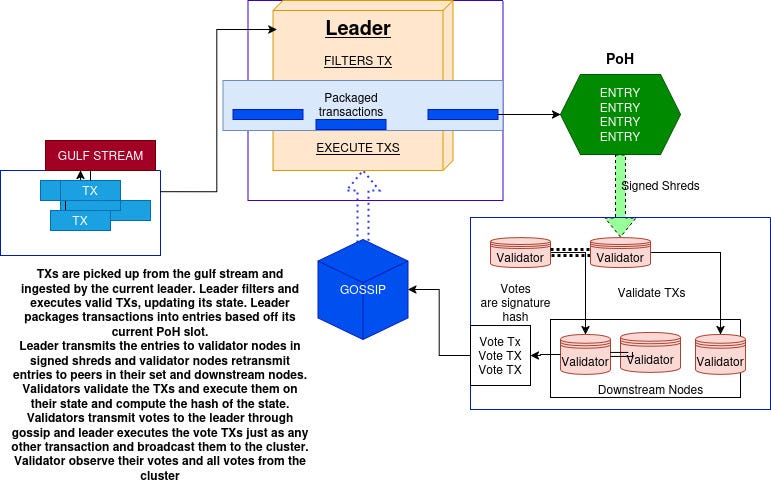

At a high level, a client submits transactions, and Gulf Stream forwards them over QUIC to the leader's TPU. There they are received in batches and pass a series of verification checks. The banking stage then schedules them and hands them to Sealevel for execution. State updates are written back into the bank's in-memory state, and validators vote on blocks through gossip. Blocks are finalized using Tower BFT, a PBFT variant with stake-weighted lockouts.

Gulf Stream

In most blockchains, transactions submitted by users are queued in a memory pool (mempool) until the network processes them. Signed transactions can remain in the mempool for a long time. If the network is congested or execution conditions are not met, they can remain there indefinitely and are never included in a block.

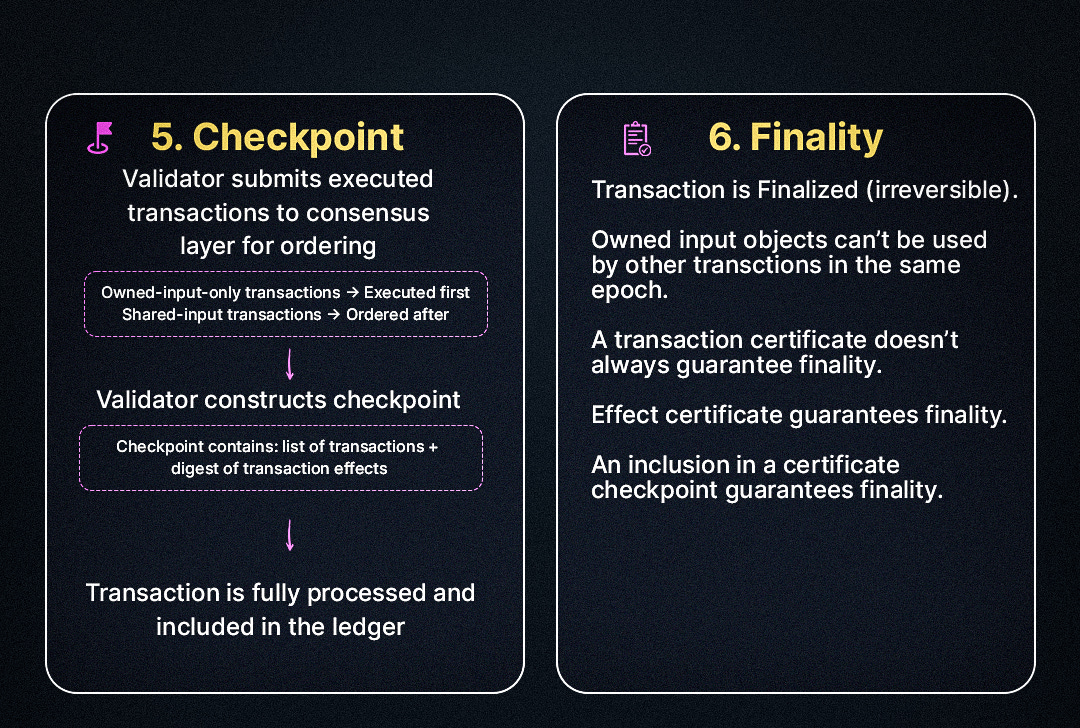

Solana eliminates the need for a global mempool by forwarding transactions directly to upcoming leaders according to a deterministic, stake-weighted leader schedule. In addition, a mechanism known as Stake-Weighted Quality of Service (SWQoS) prioritizes transaction messages routed through staked validators. Because all active nodes know the leader schedule ahead of time, transaction messages can be distributed without gossip overhead. Upcoming leaders therefore already have transactions to process before their turn to produce a block. This lets validators execute transactions ahead of time, shortens confirmation times, and reduces the memory pressure caused by unconfirmed transactions. Under high load, validators can also drop failing transactions early.
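Because every node knows the leader schedule in advance, deciding where to forward a transaction reduces to a lookup. The sketch below assumes a hypothetical schedule represented as one leader identity per slot; on Solana, a leader is assigned several consecutive slots. It returns the distinct leaders of the next few slots, which are the nodes a transaction would be forwarded to. The name forward_targets and its parameters are illustrative, not Gulf Stream's actual code.

```rust
/// Hypothetical: return the distinct leaders scheduled for the `lookahead`
/// slots starting at `current_slot`, in schedule order.
/// `schedule[i]` is the leader identity for slot `epoch_start + i`.
pub fn forward_targets<'a>(
    schedule: &[&'a str],
    epoch_start: u64,
    current_slot: u64,
    lookahead: u64,
) -> Vec<&'a str> {
    let mut targets: Vec<&'a str> = Vec::new();
    for slot in current_slot..current_slot + lookahead {
        // Slots before the start of the known schedule are skipped.
        let Some(offset) = slot.checked_sub(epoch_start) else { continue };
        // Slots past the end of the known schedule are skipped as well.
        if let Some(&leader) = schedule.get(offset as usize) {
            if !targets.contains(&leader) {
                targets.push(leader);
            }
        }
    }
    targets
}
```

A client or RPC node could send the transaction to each returned leader. The transaction then reaches the leader that will actually produce the block, even if a slot boundary passes in between.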

Gulf Stream also avoids a cost of mempool-based chains. On those chains, transactions are first gossiped to the network, and then leaders (block producers) must re-transmit the same transactions in a block, so every transaction is propagated at least twice. Solana does not need to overload gossip to synchronize pending transactions. Transactions also do not need to compete for block space through gas auctions; instead, they are distributed according to the leader schedule.

TPU

The TPU is the validator pipeline responsible for block production. Its first stage is the Fetch Stage. Here, transactions arrive from clients as data packets through a component called the QUIC streamer, which allocates packet memory and reads data from the QUIC endpoint. Each QUIC stream carries one packet, within a connection identified by the client (IP address, node pubkey) and the server.

The packets then move to the next stage in the pipeline, the SigVerify Stage. Here, duplicate packets are removed, a load-shedding mechanism discards excess packets, and packets with invalid signatures are filtered out. The remaining packets are forwarded to the banking stage.
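The deduplicate, shed, then verify ordering can be sketched as a small filter chain. This is a minimal illustration under several assumptions. Packets are identified by their first signature, and a HashSet stands in for the validator's real deduplication structure. The max_packets parameter is a hypothetical load-shedding cap. The verify closure, supplied by the caller, stands in for actual Ed25519 signature verification. The Packet type and sigverify_stage function are hypothetical.

```rust
use std::collections::HashSet;

/// Hypothetical, simplified packet: its first signature identifies it.
#[derive(Clone, Debug, PartialEq, Eq)]
pub struct Packet {
    pub signature: [u8; 64],
    pub payload: Vec<u8>,
}

/// Sketch of the SigVerify flow: drop duplicates, shed load beyond
/// `max_packets`, then keep only packets whose signature verifies.
pub fn sigverify_stage<F>(packets: Vec<Packet>, max_packets: usize, verify: F) -> Vec<Packet>
where
    F: Fn(&Packet) -> bool,
{
    let mut seen: HashSet<[u8; 64]> = HashSet::new();
    packets
        .into_iter()
        // Deduplicate: `insert` returns false if this signature was already seen.
        .filter(|p| seen.insert(p.signature))
        // Load shedding: keep at most `max_packets` unique packets.
        .take(max_packets)
        // Signature check (stand-in for real Ed25519 verification).
        .filter(|p| verify(p))
        .collect()
}
```

Running the cheap checks (deduplication and load shedding) before the expensive one (signature verification) is the point of this ordering. Spam and duplicates are discarded before they consume verification capacity.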

The banking stage is the core of runtime execution. Here packets are scheduled and checked for account conflicts, and each is then either held, processed, or forwarded in batches. If the node is the current block producer, the banking stage processes the held and newly received packets against the Bank at the tip slot (a slot is the unit of time allotted to a leader for producing a block). The Bank is an in-memory representation of the full ledger state at a given slot.
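The banking stage's conflict check rests on per-account read and write locks, taken from each transaction's declared account list. In this model, a transaction may take its locks only if none of its writable accounts is locked at all and none of its read-only accounts is write-locked. The following is a minimal sketch, assuming accounts are identified by strings. AccountLocks, try_lock, and unlock are hypothetical names, not the validator's API.

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical lock table: write locks are exclusive, read locks are counted.
#[derive(Default)]
pub struct AccountLocks {
    write_locked: HashSet<String>,
    read_locks: HashMap<String, usize>,
}

impl AccountLocks {
    /// Try to lock all accounts of one transaction; all-or-nothing.
    pub fn try_lock(&mut self, writes: &[&str], reads: &[&str]) -> bool {
        let write_conflict = writes
            .iter()
            .any(|a| self.write_locked.contains(*a) || self.read_locks.contains_key(*a));
        let read_conflict = reads.iter().any(|a| self.write_locked.contains(*a));
        if write_conflict || read_conflict {
            return false; // conflicting transaction must wait for a later batch
        }
        for a in writes {
            self.write_locked.insert(a.to_string());
        }
        for a in reads {
            *self.read_locks.entry(a.to_string()).or_insert(0) += 1;
        }
        true
    }

    /// Release the locks taken by a successful `try_lock`.
    pub fn unlock(&mut self, writes: &[&str], reads: &[&str]) {
        for a in writes {
            self.write_locked.remove(*a);
        }
        for a in reads {
            let remaining = match self.read_locks.get_mut(*a) {
                Some(n) => {
                    *n -= 1;
                    *n
                }
                None => continue,
            };
            if remaining == 0 {
                self.read_locks.remove(*a);
            }
        }
    }
}
```

Two transactions that only read a shared account (for example, the same program account) both acquire their locks and can run in the same batch. A transaction that writes an account already in use is deferred.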

Within the banking stage's scheduler, each transaction is tracked in one of two states:

An unprocessed state where the transaction is available for scheduling

A pending state where the transaction is currently being scheduled or being processed.

When a transaction finishes processing, it may be retryable. If it is, the transaction transitions back to the unprocessed state; if it is not, it is dropped. Valid processed transactions are recorded into entries via PoH and bundled into blocks. They are then broadcast as shreds to network peers through Turbine, Solana's block-propagation protocol. Before transmitting packets to the appropriate peers, Turbine generates erasure codes so that lost data packets can be recovered. This stage is called the Broadcast Stage.
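The two tracked states, plus the drop path, can be modeled as a tiny state machine. This is an illustrative sketch only. TxState, Event, and transition are hypothetical names, and the real scheduler tracks considerably more metadata per transaction.

```rust
/// Hypothetical model of how the scheduler tracks one transaction.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum TxState {
    Unprocessed, // available for scheduling
    Pending,     // currently being scheduled or processed
    Dropped,     // no longer tracked by the scheduler
}

#[derive(Debug, Clone, Copy)]
pub enum Event {
    Scheduled,
    Finished { retryable: bool },
}

/// Apply one event; transitions not listed leave the state unchanged.
pub fn transition(state: TxState, event: Event) -> TxState {
    match (state, event) {
        (TxState::Unprocessed, Event::Scheduled) => TxState::Pending,
        // Retryable transactions return to the unprocessed pool.
        (TxState::Pending, Event::Finished { retryable: true }) => TxState::Unprocessed,
        // Non-retryable transactions stop being tracked.
        (TxState::Pending, Event::Finished { retryable: false }) => TxState::Dropped,
        (s, _) => s,
    }
}
```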

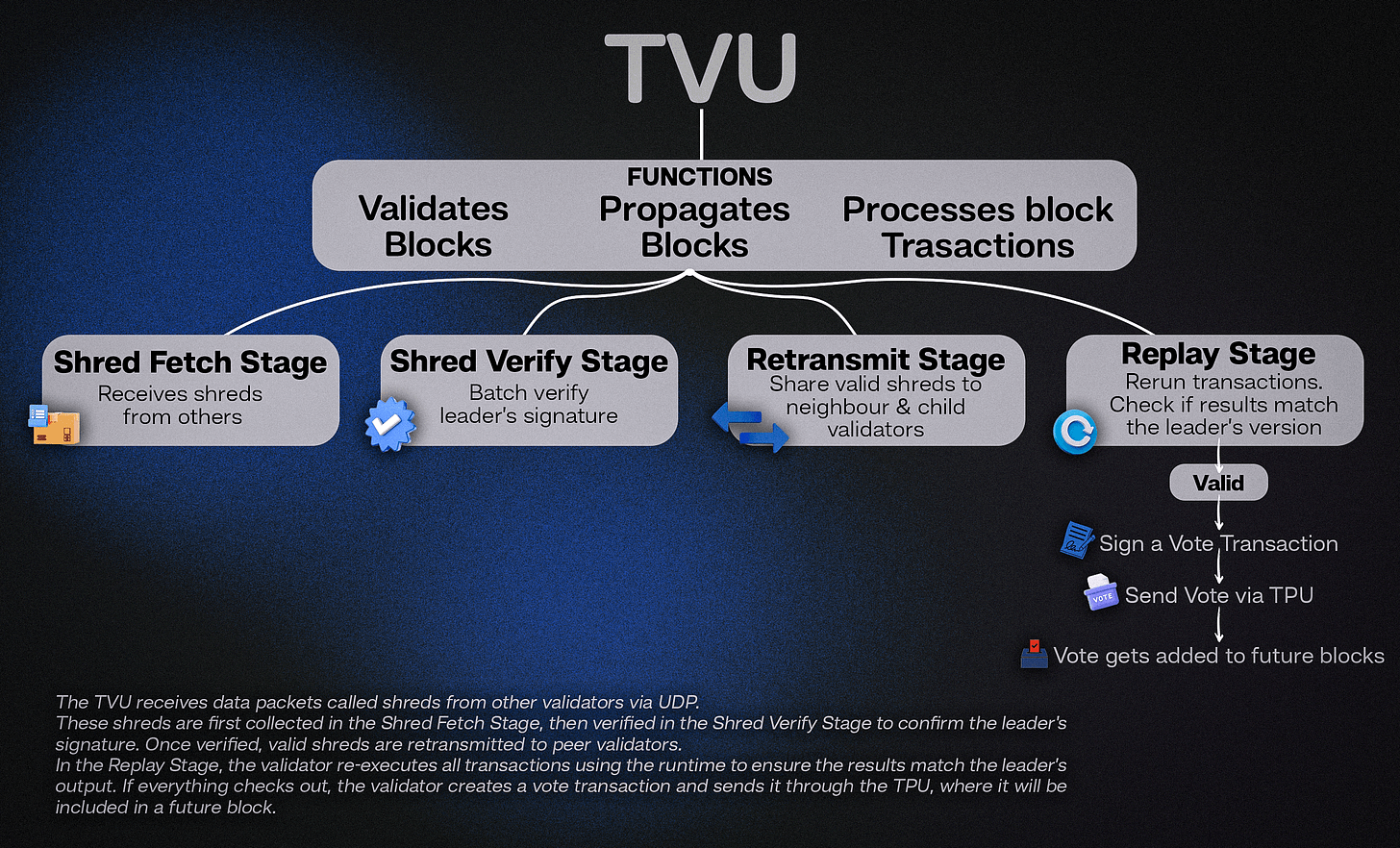

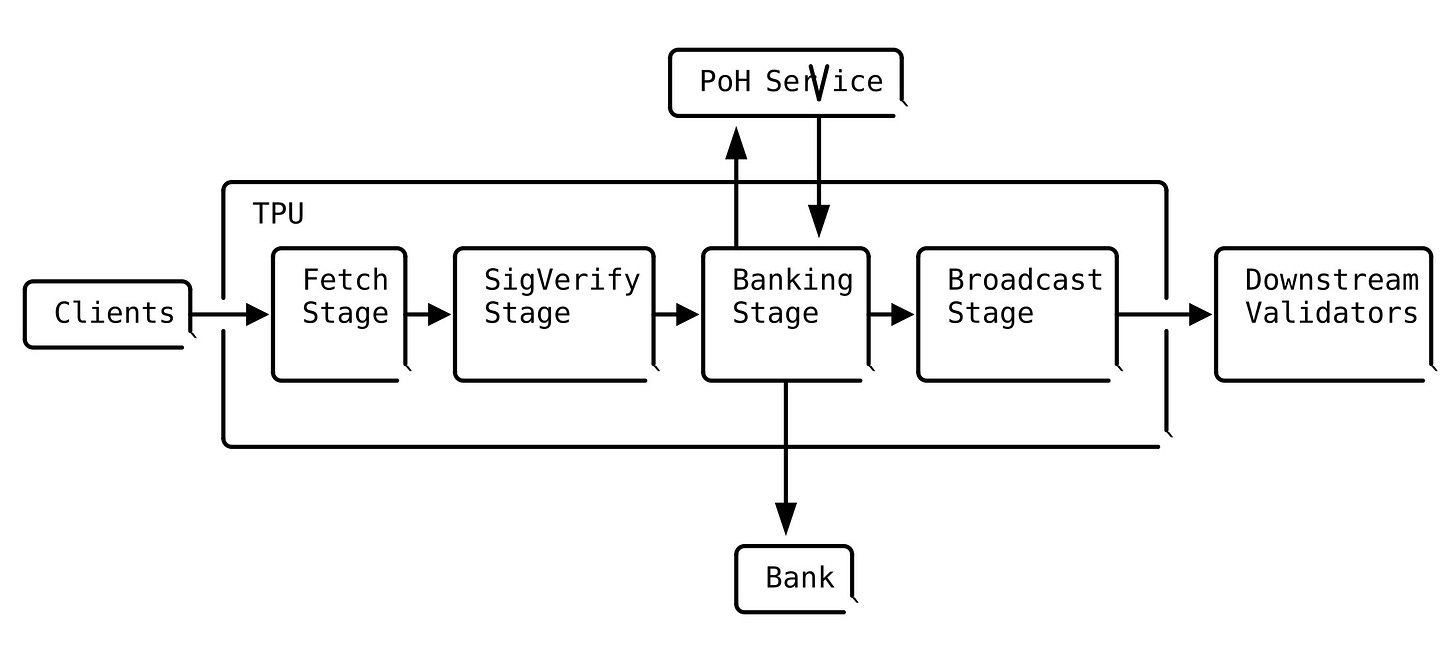

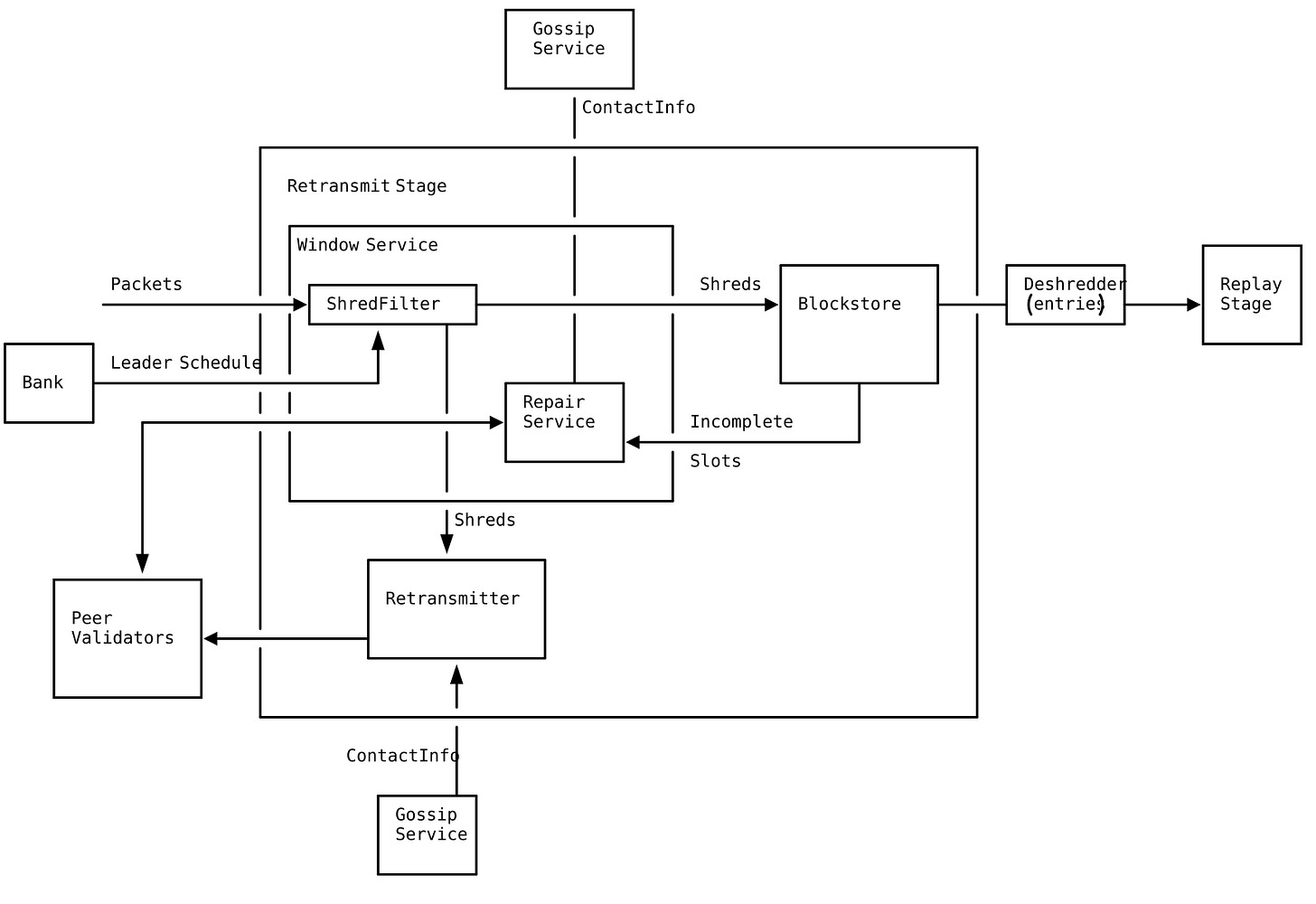

TVU

The TVU is the part of the validator responsible for validating and propagating blocks and for processing those blocks' transactions through the runtime. As mentioned earlier, it runs on non-leader validator nodes, mainly to reconstruct and validate blocks. In the TVU, data packets are also processed in multi-threaded stages before they are finalized. These stages include the shred fetch stage, signature verification, the retransmit stage, and the replay stage.

In the Shred Fetch Stage and the Shred Verify Leader Signature Stage, non-leaders receive blocks as shreds from other nodes via UDP and verify the leader's signatures on the shreds in batches. In the Retransmit Stage, valid shreds are retransmitted to peers, namely neighborhood validators and child validators. In the Replay Stage, each transaction is replayed in order on a bank.

In the Replay Stage, the runtime is invoked again to deterministically re-execute all transactions. This ensures that all state changes, program attributes, and transaction logs match the leader's output exactly.
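Replay works only because execution is deterministic: the same ordered transactions applied to the same starting state must yield the same state. The sketch below illustrates this with a toy bank of balances held in a BTreeMap, so that iteration and hashing order are deterministic. A hash of the resulting state is compared against the leader's. Transfer, replay, state_hash, and verify_replay are hypothetical names. DefaultHasher is a non-cryptographic stand-in for the bank hash Solana actually computes.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::BTreeMap;
use std::hash::{Hash, Hasher};

/// Hypothetical transfer between two accounts.
pub struct Transfer {
    pub from: &'static str,
    pub to: &'static str,
    pub lamports: u64,
}

/// Apply transfers in order; a transfer with insufficient funds changes nothing.
pub fn replay(mut bank: BTreeMap<&'static str, u64>, txs: &[Transfer]) -> BTreeMap<&'static str, u64> {
    for tx in txs {
        let balance = bank.get(tx.from).copied().unwrap_or(0);
        if balance < tx.lamports {
            continue; // failed transaction: no state change
        }
        *bank.entry(tx.from).or_insert(0) -= tx.lamports;
        *bank.entry(tx.to).or_insert(0) += tx.lamports;
    }
    bank
}

/// Non-cryptographic stand-in for a bank hash over the final state.
pub fn state_hash(bank: &BTreeMap<&'static str, u64>) -> u64 {
    let mut h = DefaultHasher::new();
    bank.hash(&mut h); // BTreeMap hashes its entries in key order
    h.finish()
}

/// A validator accepts the block only if its replayed state matches the leader's.
pub fn verify_replay(start: &BTreeMap<&'static str, u64>, txs: &[Transfer], leader_hash: u64) -> bool {
    state_hash(&replay(start.clone(), txs)) == leader_hash
}
```

Any nondeterminism, such as iteration over an unordered map or dependence on local time, would make honest validators disagree with the leader. That is why both the state representation and the transaction order must be fixed.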

If a block is valid, the validator signs a vote transaction (distinct from regular transactions) and sends it to the leader's TPU. Votes are then included in subsequent blocks.

Runtime

The runtime is Solana's concurrent transaction processor, shared between the TPU and the TVU. Transactions specify their data dependencies upfront, and dynamic memory allocation is explicit. Because state is separated from program code, the runtime can choreograph concurrent access. It uses an execution engine called Sealevel, together with SIMD (Single Instruction, Multiple Data) processing. Transactions that only read shared accounts, or that touch disjoint sets of accounts, are executed in parallel, while transactions that write to the same account are serialized. Within the runtime, transactions are executed atomically: every instruction in a transaction must execute successfully for the transaction to be committed to the bank. Otherwise, the whole operation aborts.

The runtime interacts with a program through an entrypoint with a well-defined interface. Its execution engine maps public keys to accounts and routes them to this entrypoint. It also enforces a set of critical constraints on execution:

Only the owner program may modify the contents of an account. Accordingly, when an account is assigned to a program, its data vector is guaranteed to be zero.

The total balance across all accounts is the same before and after a transaction executes.

After the transaction is executed, balances of read-only accounts must be equal to the balances before the transaction.

All instructions in the transaction are executed atomically. If one fails, all account modifications are discarded.
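The balance and atomicity rules above can be expressed as a check around execution. Every instruction runs on a scratch copy of the accounts, and that copy is returned for commit only if all instructions succeed and the invariants hold. The sketch below is a simplified model built on hypothetical types (Account, Instruction, Accounts). It covers only lamport conservation, unchanged read-only balances, and all-or-nothing execution; it does not model the ownership rule or real program execution.

```rust
use std::collections::BTreeMap;

/// Hypothetical account: a balance plus the writability declared by the transaction.
#[derive(Clone, Debug, PartialEq)]
pub struct Account {
    pub lamports: u64,
    pub writable: bool,
}

/// Hypothetical instruction: move `amount` lamports between two accounts.
pub struct Instruction {
    pub from: &'static str,
    pub to: &'static str,
    pub amount: u64,
}

pub type Accounts = BTreeMap<&'static str, Account>;

fn total(accounts: &Accounts) -> u128 {
    accounts.values().map(|a| a.lamports as u128).sum()
}

/// Run all instructions on a scratch copy and return it only if every
/// instruction succeeds and the invariants hold. The caller commits the
/// returned copy, so on error nothing is applied.
pub fn execute_transaction(accounts: &Accounts, ixs: &[Instruction]) -> Result<Accounts, String> {
    let mut scratch = accounts.clone();
    for (i, ix) in ixs.iter().enumerate() {
        let from = scratch
            .get_mut(ix.from)
            .ok_or_else(|| format!("instruction {i}: unknown account {}", ix.from))?;
        if from.lamports < ix.amount {
            return Err(format!("instruction {i}: insufficient funds"));
        }
        from.lamports -= ix.amount;
        let to = scratch
            .get_mut(ix.to)
            .ok_or_else(|| format!("instruction {i}: unknown account {}", ix.to))?;
        to.lamports = to
            .lamports
            .checked_add(ix.amount)
            .ok_or_else(|| format!("instruction {i}: overflow"))?;
    }
    // Invariant: total lamports are the same before and after execution.
    if total(&scratch) != total(accounts) {
        return Err("lamports not conserved".to_string());
    }
    // Invariant: read-only accounts keep their balances.
    for (key, before) in accounts {
        if !before.writable && scratch[key].lamports != before.lamports {
            return Err(format!("read-only account {key} was modified"));
        }
    }
    Ok(scratch)
}
```

With transfer-only instructions, the conservation check can never fail. It is kept because, in the real runtime, arbitrary programs mutate balances and the runtime has to verify the invariant rather than assume it.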

The TPU and TVU follow slightly different paths when interacting with the runtime. In the TPU, the runtime ensures that entries are recorded in PoH before state changes are committed to memory. In the TVU, it ensures that PoH entries are verified before any transactions are processed.

Consensus

Consensus is one of the most important and fundamental mechanisms in complex distributed systems. In Solana's transaction lifecycle, consensus comes after a block has been executed and validated by the TVU, but before it is finalized and committed. At the core of consensus are the uniform agreement and integrity properties. Together they ensure that every participant in the network decides on the same outcome and, once it has decided, cannot change its decision. Formally, a fault-tolerant consensus mechanism must satisfy the following properties:

Uniform agreement: No two nodes decide differently.

Integrity: No node decides twice.

Validity: If a node decides a value y, then y was proposed by some node.

Termination: Every node that does not crash eventually decides some value.

On the surface, the goal of consensus is simply to get nodes to agree on something. In Solana, agreement among nodes matters most in three scenarios:

Leader rotation

All nodes need to agree on which node is the leader. Otherwise, the leadership position could become contested. If some nodes cannot communicate with others because of a network fault, two nodes might each be believed to be the leader, a situation known as split brain.

Solana generates the leader schedule from a predefined seed using the following algorithm. The PoH tick height (a monotonically increasing counter) is periodically used to seed a stable pseudo-random algorithm. At that height, the bank is sampled for all staked accounts with leader identities that have voted within a cluster-configured number of ticks. This sample, called the active set, is sorted by stake weight. The random seed is then used to select nodes weighted by stake, producing a stake-weighted ordering. This ordering becomes valid after a cluster-configured number of ticks.
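The schedule generation just described can be sketched as a deterministic, stake-weighted draw from a shared seed. The example assumes a hypothetical active set of (identity, stake) pairs. To stay dependency-free, it uses a small SplitMix64 generator. Solana's actual implementation uses a different random number generator and its own rules, so leader_schedule and slots_per_leader are illustrative only. The property that matters is that every node running this with the same seed and stakes derives the same schedule.

```rust
/// Minimal SplitMix64 PRNG (stand-in for the generator Solana actually uses).
struct SplitMix64(u64);

impl SplitMix64 {
    fn next_u64(&mut self) -> u64 {
        self.0 = self.0.wrapping_add(0x9E37_79B9_7F4A_7C15);
        let mut z = self.0;
        z = (z ^ (z >> 30)).wrapping_mul(0xBF58_476D_1CE4_E5B9);
        z = (z ^ (z >> 27)).wrapping_mul(0x94D0_49BB_1331_11EB);
        z ^ (z >> 31)
    }
}

/// Hypothetical stake-weighted schedule of `num_slots` slots, where each
/// selected leader holds `slots_per_leader` consecutive slots.
pub fn leader_schedule(
    active_set: &[(&'static str, u64)],
    seed: u64,
    num_slots: usize,
    slots_per_leader: usize,
) -> Vec<&'static str> {
    let mut nodes = active_set.to_vec();
    // Same deterministic order on every node: stake descending, then identity.
    nodes.sort_by(|a, b| b.1.cmp(&a.1).then(a.0.cmp(b.0)));
    let total: u64 = nodes.iter().map(|n| n.1).sum();
    let mut rng = SplitMix64(seed);
    let mut schedule = Vec::with_capacity(num_slots);
    while schedule.len() < num_slots && total > 0 && slots_per_leader > 0 {
        // Draw a point in [0, total) (modulo bias ignored for simplicity)
        // and pick the node whose stake range covers it.
        let mut point = rng.next_u64() % total;
        let mut leader = nodes[0].0;
        for &(id, stake) in &nodes {
            if point < stake {
                leader = id;
                break;
            }
            point -= stake;
        }
        for _ in 0..slots_per_leader {
            if schedule.len() < num_slots {
                schedule.push(leader);
            }
        }
    }
    schedule
}
```

Sorting before sampling matters. Nodes may collect the active set in different orders, and without a canonical ordering the same seed would map to different leaders on different nodes.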

Synchronization

Without reliable timestamps, a validator cannot determine the order of incoming blocks. Solana uses Proof-of-History as a cryptographic clock to order transactions before they undergo consensus. According to the Anza documentation on synchronization:

“Leader nodes "timestamp" blocks with cryptographic proofs that some duration of time has passed since the last proof. All data hashed into the proof most certainly have occurred before the proof was generated. The node then shares the new block with validator nodes, which are able to verify those proofs. The blocks can arrive at validators in any order or even could be replayed years later. With such reliable synchronization guarantees, Solana is able to break blocks into smaller batches of transactions called entries. Entries are then streamed to validators in real time, before any notion of block consensus”.

It is important to keep in mind that while Proof-of-History is not a consensus mechanism, it hugely impacts the performance of Solana’s Proof-of-Stake consensus.
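Proof-of-History is a sequential hash chain. Each hash takes the previous one as input, so producing N hashes requires N sequential steps, and any event mixed into the chain is provably ordered relative to the others. A minimal sketch of recording and verifying such a chain follows. It uses the standard library's DefaultHasher purely as a stand-in for SHA-256, which Solana uses, so the example stays dependency-free. The Entry layout and the record and verify functions are hypothetical.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Stand-in for SHA-256: hash the previous value, optionally mixing in data.
fn step(prev: u64, data: Option<&[u8]>) -> u64 {
    let mut h = DefaultHasher::new();
    prev.hash(&mut h);
    if let Some(d) = data {
        d.hash(&mut h);
    }
    h.finish()
}

/// Hypothetical entry: `num_hashes` sequential hashes since the previous
/// entry, the last of which mixes in `event` (if any).
#[derive(Debug, Clone)]
pub struct Entry {
    pub num_hashes: u64,
    pub event: Option<Vec<u8>>,
    pub hash: u64,
}

/// Leader side: advance the chain from `prev` and record an entry.
pub fn record(prev: u64, num_hashes: u64, event: Option<Vec<u8>>) -> Entry {
    assert!(num_hashes >= 1, "an entry needs at least one hash");
    let mut h = prev;
    for _ in 0..num_hashes - 1 {
        h = step(h, None); // pure ticking: evidence that time has passed
    }
    h = step(h, event.as_deref()); // final hash mixes in the event, if any
    Entry { num_hashes, event, hash: h }
}

/// Validator side: recompute every entry from the starting hash.
pub fn verify(start: u64, entries: &[Entry]) -> bool {
    let mut prev = start;
    for e in entries {
        if e.num_hashes == 0 || record(prev, e.num_hashes, e.event.clone()).hash != e.hash {
            return false;
        }
        prev = e.hash;
    }
    true
}
```

This version checks entries sequentially. Each entry, however, records its own ending hash, so a validator could check all entries in parallel, each starting from the previous entry's hash. This is what makes PoH cheaper to verify than to generate.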

Atomic commit

Nodes also need to agree on atomic commit. In a high-performance system like Solana, capable of processing tens of thousands of transactions, a transaction could fail on some nodes and succeed on others. To avoid partial execution, Solana ensures atomicity within the runtime, in the ACID sense. It also ensures that all nodes agree on the outcome of a transaction: either they all roll back (if anything goes wrong) or they all commit (if nothing goes wrong).

Commitment in Solana measures the finality of a block (slot) based on how many validators have voted for it and how deep those votes are in the Tower BFT lockout mechanism. It reflects how strongly the network has agreed on a given slot. Each validator votes on slots and commits not to vote on conflicting forks for a period of time, a rule enforced by Tower BFT lockouts. There are three commitment statuses in Solana: processed, confirmed, and finalized. A block is confirmed when validators holding a supermajority of stake (66%+) have voted on it. It is finalized when at least 31 confirmed blocks have been built on top of it.
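The three commitment levels can be summarized as a small classification function. This is a simplified model of the rules as stated above, not the validator's actual logic. Here voted_stake is the stake that has voted on the slot, the supermajority is taken as at least two-thirds of total stake, and confirmed_descendants counts confirmed blocks built on top of the slot. The function and enum names are hypothetical.

```rust
#[derive(Debug, PartialEq, Eq)]
pub enum Commitment {
    Processed,
    Confirmed,
    Finalized,
}

/// Simplified commitment rule following the description above.
pub fn commitment(voted_stake: u64, total_stake: u64, confirmed_descendants: u32) -> Commitment {
    // Integer form of voted / total >= 2/3, avoiding floating point.
    let supermajority =
        total_stake > 0 && (voted_stake as u128) * 3 >= (total_stake as u128) * 2;
    match (supermajority, confirmed_descendants) {
        (true, d) if d >= 31 => Commitment::Finalized,
        (true, _) => Commitment::Confirmed,
        _ => Commitment::Processed,
    }
}
```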

Because of its optimistic parallel execution and asynchronous leader schedule, Solana follows an “execute first, vote later” model. The protocol does not wait for all validators to agree on a newly produced block before the next block is produced. This can lead to forks, where two or more competing chains of slots exist at the same time, most often because validators lose connection to a leader. Once a slot is finalized, the fork containing it becomes the canonical chain and competing forks are abandoned.

Sui

Unlike Solana, which uses an account-centric model, Sui uses an object-centric data model. In this model, state is represented as objects, each with a unique identifier and a set of properties.

In Sui, a smart contract is itself an object, called a Sui Move package, that has a unique identifier and manipulates other objects. A Sui Move package is made up of a set of Sui Move bytecode modules. Each module has a name that is unique within its package. The combination of the package's on-chain ID and the module name uniquely identifies the module.

The intricacies of object design and metadata are beyond the scope of this article. Still, keep in mind that every object has an owner, which dictates how that object can be used in transactions. Objects can have the following ownership models:

Address-Owned Objects: An address-owned object is owned by a specific 32-byte address, which is either an account address or an object ID. It is accessible only to its owner.

Immutable Objects: An immutable object cannot be mutated, transferred, or deleted. It has no owner and is globally accessible, so anyone can use it.

Shared Objects: A shared object is accessible to everyone: any transaction can read or mutate it.

Wrapped Objects: A wrapped object is stored inside another object. It has no independent existence and can only be accessed through the wrapping object.
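These ownership rules determine who may pass an object directly as a transaction input, and whether the transaction may mutate it. The following is a simplified, hypothetical Rust model; Ownership, Access, and input_access are not Sui's actual types. Wrapped objects are modeled as never directly usable, because they can only be reached through their wrapper.

```rust
/// Hypothetical 32-byte address.
pub type Address = [u8; 32];

/// Simplified ownership model following the list above.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Ownership {
    AddressOwned(Address),
    Immutable,
    Shared,
    Wrapped,
}

#[derive(Debug, PartialEq, Eq)]
pub enum Access {
    Denied,
    ReadOnly,
    ReadWrite,
}

/// How may `sender` use an object with this ownership as a direct input?
pub fn input_access(owner: Ownership, sender: &Address) -> Access {
    match owner {
        Ownership::AddressOwned(addr) if &addr == sender => Access::ReadWrite,
        Ownership::AddressOwned(_) => Access::Denied, // only the owner may use it
        Ownership::Immutable => Access::ReadOnly,     // anyone may read, nobody may mutate
        Ownership::Shared => Access::ReadWrite,       // anyone may read or mutate
        Ownership::Wrapped => Access::Denied,         // only via the wrapping object
    }
}
```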

A Sui Transaction

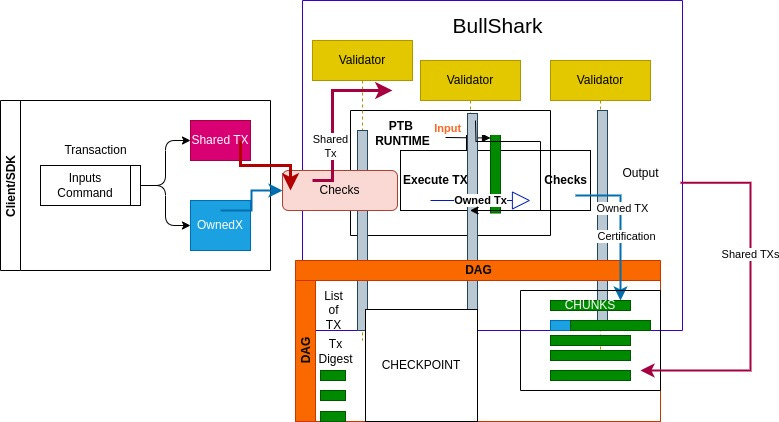

On Sui, a transaction is composed of a group of commands that execute on inputs to define the result of the transaction. This group of commands is called a programmable transaction block (PTB), and PTBs define all user transactions on Sui. A PTB lets a user call multiple Move functions, manage their objects, and manage their coins in a single transaction, without having to publish a new Move package. The structure of a PTB is:

```
{
    inputs: [Input],
    commands: [Command],
}
```

Here, inputs is a vector of arguments that are either objects or pure values. The objects can be owned by the sender, or they can be shared or immutable objects. The commands field is a vector of commands, which are mini, bytecode-like, high-level steps analogous to instructions. During execution of a PTB, the input vector is populated with the input objects or pure value bytes. The commands are executed in order, and their results are stored in a result vector. Finally, the effects of the transaction are applied atomically.

The internals of Sui PTBs are another intricate topic, which we will not discuss here. One point is worth noting: at the beginning of execution, the PTB runtime takes the already-loaded input objects and places them in the input array. These objects have already been verified by the network, which checks rules such as existence and valid ownership. Pure value bytes are also loaded into the array but are not validated until they are used. The effect on the gas coin is of utmost importance at this stage. The maximum gas budget is withdrawn from the gas coin up front, and any unused gas is returned to the gas coin at the end of execution, even if the coin has changed owners. Each command is then executed in order.
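The execution steps just described can be sketched with a toy model: load the inputs, withdraw the gas budget, run the commands in order, apply the effects atomically, and refund unused gas. Everything here is hypothetical and much simpler than Sui's runtime. Coins are plain balances, and the only commands are SplitCoin and MergeCoins. Each command has a flat gas cost, and Arg::Created(i) refers to the i-th coin created so far. As on Sui, gas is still charged when execution fails, but no other effects are applied.

```rust
/// Hypothetical argument: an input slot or the i-th coin created so far.
#[derive(Debug, Clone, Copy)]
pub enum Arg {
    Input(usize),
    Created(usize),
}

/// Hypothetical commands over coin balances.
pub enum Command {
    /// Take `amount` from a coin and create a new coin holding it.
    SplitCoin(Arg, u64),
    /// Move the whole balance of the second coin into the first.
    MergeCoins(Arg, Arg),
}

/// Hypothetical flat gas cost per command.
const GAS_PER_COMMAND: u64 = 10;

#[derive(Debug, PartialEq)]
pub struct Effects {
    pub inputs: Vec<u64>,  // final balances of the input coins
    pub created: Vec<u64>, // balances of coins created by the PTB
}

fn slot<'a>(ins: &'a mut [u64], created: &'a mut [u64], arg: Arg) -> Result<&'a mut u64, String> {
    match arg {
        Arg::Input(i) => ins.get_mut(i).ok_or_else(|| format!("no input {i}")),
        Arg::Created(i) => created.get_mut(i).ok_or_else(|| format!("no created coin {i}")),
    }
}

fn run(ins: &mut Vec<u64>, created: &mut Vec<u64>, commands: &[Command], budget: u64, gas_used: &mut u64) -> Result<(), String> {
    for (idx, cmd) in commands.iter().enumerate() {
        *gas_used += GAS_PER_COMMAND;
        if *gas_used > budget {
            *gas_used = budget;
            return Err(format!("command {idx}: out of gas"));
        }
        match cmd {
            Command::SplitCoin(src, amount) => {
                let coin = slot(ins.as_mut_slice(), created.as_mut_slice(), *src)?;
                if *coin < *amount {
                    return Err(format!("command {idx}: insufficient balance"));
                }
                *coin -= *amount;
                created.push(*amount);
            }
            Command::MergeCoins(dst, src) => {
                // A real merge deletes the source coin; here it is just drained.
                let amount = std::mem::take(slot(ins.as_mut_slice(), created.as_mut_slice(), *src)?);
                let coin = slot(ins.as_mut_slice(), created.as_mut_slice(), *dst)?;
                *coin = coin.checked_add(amount).ok_or("balance overflow")?;
            }
        }
    }
    Ok(())
}

/// Execute a toy PTB against `inputs`, paying gas from `gas_coin`.
pub fn execute_ptb(inputs: &[u64], commands: &[Command], gas_coin: &mut u64, gas_budget: u64) -> Result<Effects, String> {
    // Withdraw the maximum gas budget from the gas coin up front.
    if *gas_coin < gas_budget {
        return Err("gas coin cannot cover the budget".to_string());
    }
    *gas_coin -= gas_budget;
    // Work on copies so effects are applied only if every command succeeds.
    let mut ins = inputs.to_vec();
    let mut created = Vec::new();
    let mut gas_used = 0;
    let outcome = run(&mut ins, &mut created, commands, gas_budget, &mut gas_used);
    // Unused gas is refunded whether or not execution succeeded.
    *gas_coin += gas_budget - gas_used;
    outcome.map(|()| Effects { inputs: ins, created })
}
```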

(Note: Sui also supports another kind of transaction called a sponsored transaction. It has the same structure as a PTB, but a Sui address known as the sponsor pays the gas fees for a transaction that another address initiates.)

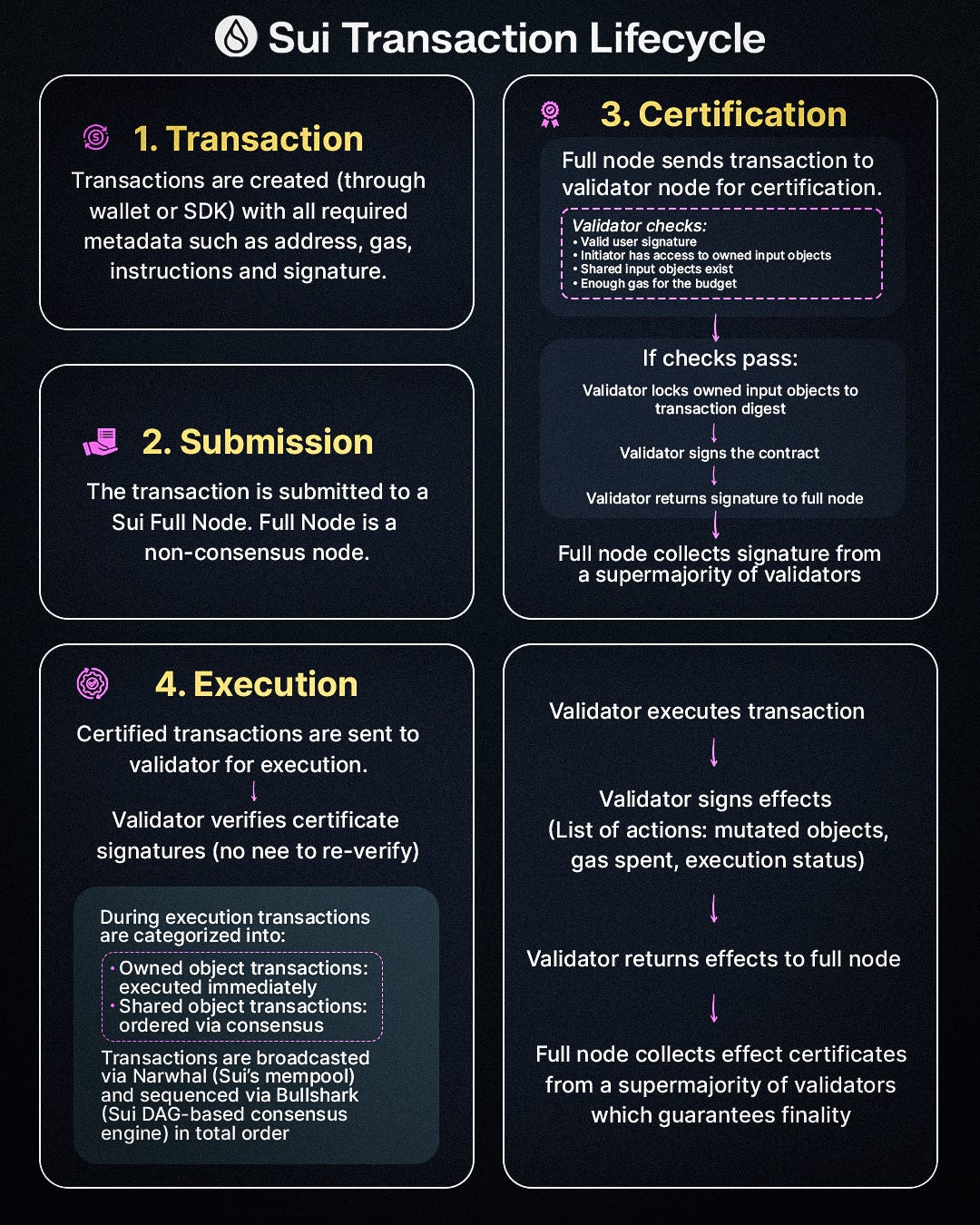

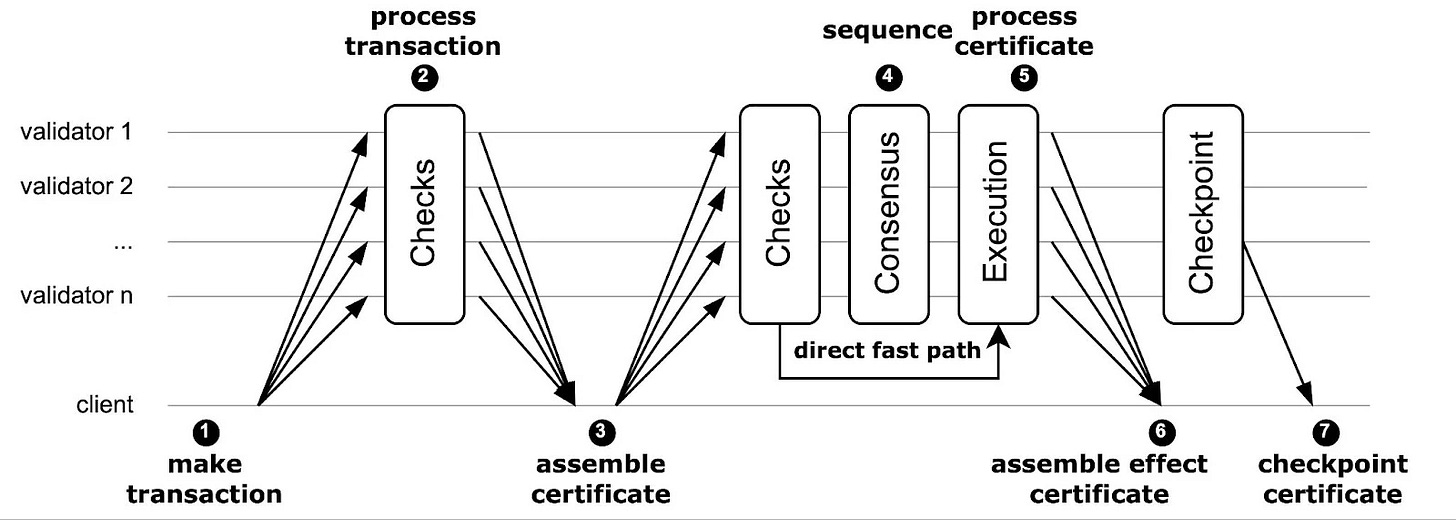

After submission, the Full node begins the certification process by sending the transaction to the validators (Certification). Each validator performs the necessary validity checks on the transaction and signs it if it passes them. For a validator to consider a transaction valid, the transaction must:

Have a valid user signature.

Come from a sender that has access to all the owned input objects the transaction uses.

Use only shared input objects that exist.

Provide a gas coin holding at least as much gas as the transaction's gas budget.

If all the checks pass, the validator attempts to lock all the owned input objects to the given transaction digest. This ensures that each owned input can be used by only one transaction at a time. If locking succeeds, the validator signs the transaction and returns the signature to the Full node. The Full node does not stop at a single validator signature. It collects signatures from many validators in parallel until they represent a supermajority, and together these signatures form a transaction certificate.
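The locking step prevents a sender from equivocating, that is, from getting two different transactions signed that spend the same owned object version. The following is a minimal sketch. It assumes objects are identified by a hypothetical (object id, version) pair and transactions by a digest string. LockTable and try_lock are illustrative names, not Sui's implementation.

```rust
use std::collections::HashMap;

/// Hypothetical object reference: (object id, version).
pub type ObjectRef = (u64, u64);

#[derive(Default)]
pub struct LockTable {
    locks: HashMap<ObjectRef, String>, // object version -> transaction digest
}

impl LockTable {
    /// Lock every owned input to `tx_digest`, or none of them.
    /// Re-locking to the same digest is allowed, so a transaction can be retried.
    pub fn try_lock(&mut self, owned_inputs: &[ObjectRef], tx_digest: &str) -> Result<(), ObjectRef> {
        for obj in owned_inputs {
            if let Some(existing) = self.locks.get(obj) {
                if existing != tx_digest {
                    return Err(*obj); // already locked by a different transaction
                }
            }
        }
        for obj in owned_inputs {
            self.locks.insert(*obj, tx_digest.to_string());
        }
        Ok(())
    }
}
```

A validator would sign only when try_lock succeeds. A conflicting transaction for the same object version therefore cannot gather signatures from a supermajority of honest validators.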

Execution and Checkpoints

Once a transaction has been issued a certificate, it is sent to the validators for execution. A validator does not need to re-verify the transaction; it only needs to verify the signatures on the certificate. If those signatures are valid, the validator can be sure that the transaction is valid.
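Checking a certificate therefore amounts to confirming that its signers are known validators whose combined stake forms a supermajority. The sketch below makes several simplifying assumptions. Individual signatures are taken as already verified, and validators are identified by name. The quorum is taken as more than two-thirds of total stake; Sui's exact threshold arithmetic may differ. The function name is hypothetical.

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical check: do the distinct, known signers hold > 2/3 of total stake?
pub fn is_valid_certificate(committee: &HashMap<&str, u64>, signers: &[&str]) -> bool {
    let total: u64 = committee.values().sum();
    let mut seen = HashSet::new();
    let mut signed: u64 = 0;
    for s in signers {
        // Ignore unknown validators and duplicate signatures.
        if let Some(stake) = committee.get(s) {
            if seen.insert(*s) {
                signed += stake;
            }
        }
    }
    (signed as u128) * 3 > (total as u128) * 2
}
```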

During execution, transactions fall into two categories:

Owned-object transactions do not access any shared input object and are executed immediately. These transactions do not pass through consensus: they are executed after validation and committed to the canonical chain.

Shared-object transactions access at least one shared object. They therefore must be ordered through consensus, relative to other transactions that use the same shared objects, before they are executed.
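Which category a transaction falls into depends only on the kinds of inputs it declares, so the path can be decided before execution. The following is a hypothetical sketch; InputKind, Path, and execution_path are illustrative names.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum InputKind {
    Owned,
    Immutable,
    Shared,
}

#[derive(Debug, PartialEq, Eq)]
pub enum Path {
    /// No shared inputs: execute right after certification, no consensus.
    FastPath,
    /// At least one shared input: order through consensus first.
    Consensus,
}

/// Decide the execution path from the declared input kinds alone.
pub fn execution_path(inputs: &[InputKind]) -> Path {
    if inputs.iter().any(|k| *k == InputKind::Shared) {
        Path::Consensus
    } else {
        Path::FastPath
    }
}
```

Immutable inputs do not force consensus in this model, because nobody can mutate them and so there is nothing to order.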

Transactions that need ordering are then disseminated via Narwhal (Sui's mempool) and sequenced into a total order by Bullshark (Sui's DAG-based consensus engine).

Narwhal, Sui's mempool, avoids typical mempool congestion because it decouples transaction dissemination from ordering. It does not order transactions itself; instead, it holds certified, signed transactions and organizes them in a DAG. In addition, because of Sui's object-centric ownership model, most transactions are independent and do not need to compete for a slot in a global ordering.

After the transaction executes, each validator signs the transaction's effects and returns them to the Full node. Transaction effects are essentially a list of all the actions the transaction took, such as the objects it mutated, the gas it spent, and its execution status. Once the Full node has collected effects signatures from a supermajority of validators, they form an effects certificate, which guarantees that the transaction is final.

Finally, the transaction is included in a checkpoint, the last stage of its lifecycle. As validators execute transactions, they submit them to the consensus layer for ordering. Transactions with only owned inputs have already been executed at this point, whereas shared-object transactions are executed after ordering. Checkpoints are created once transactions are ordered, and every checkpoint must be complete.

The validator takes chunks of ordered transactions from the consensus layer and uses them to construct a checkpoint. A checkpoint contains a list of transactions together with the digest of each transaction's effects. At this point, the transaction is fully processed and is included in the ledger.

Finality

Finality is the point at which an executed transaction becomes irreversible. From this point on, the transaction is irrevocable. Any subsequent transaction that tries to use the same owned input objects during the same epoch is considered invalid.

A transaction certificate does not always guarantee finality, although it makes finality highly likely. An effects certificate does guarantee finality, because it requires a supermajority of validators to have executed the transaction and committed to its effects. Inclusion in a certified checkpoint also guarantees finality.

Insights into Execution, Scalability and Design-Tradeoffs

As stated earlier, the lifecycle of a transaction is often the clearest expression of a blockchain's design philosophy. The differences between the transaction lifecycles of Solana and Sui reveal deep differences in execution philosophy along three critical vectors: execution efficiency, scalability thresholds, and design trade-offs.

Execution

As an account-centric chain, Solana detects account conflicts dynamically through account locking in the banking stage. Transactions that do not modify the same accounts are processed in parallel, while conflicting transactions are processed sequentially. This form of conflict detection can add runtime cost in heavy DeFi workloads, especially when programs call other programs (a mechanism called Cross-Program Invocation). Even so, dynamic conflict detection lets the Solana execution engine achieve massive parallelism, so the chain can execute transactions very quickly at very low fees.

Sui, on the other hand, does not need to detect conflicts at runtime for most transactions. Every transaction declares its input objects, and the ownership of each object is known, so conflicts can be inferred statically, before execution. The split between shared and owned objects is what makes this static inference possible. It gives owned-object transactions a nearly zero runtime cost for conflict detection.

Scalability Thresholds

In terms of scaling, Sui is more horizontally scalable and can scale linearly with object partitions, because owned-object transactions do not need to be ordered and are not bounded by consensus. Shared-object transactions, on the other hand, are limited by Bullshark (Sui's consensus layer), because they must be ordered and agreed on by validators. This design scales very well: over 80% of transactions on Sui touch only owned objects. Those transactions need neither ordering nor consensus and can therefore reach finality in less than a second. The minority of transactions that do need consensus still scale well thanks to the DAG-based consensus.

Solana runs a single leader at a time, and its execution fan-out is limited by account contention, so it appears to hit a vertical scaling ceiling. Within that ceiling, however, it optimizes aggressively. Through its well-defined pipeline, complemented by PoH, transactions can be ordered accurately, processed within a 400ms slot, and confirmed in under a second.

Design Trade-offs

Solana achieves its determinism guarantees through strict runtime constraints, account isolation, and deterministic leader scheduling. Its dynamic resolution of account contention and its well-defined pipeline favor deep interaction between programs, enabling strong composability.

Sui also achieves determinism guarantees, through execution paths determined by object ownership analysis. However, it offers weaker composability, because its object ownership model isolates transactions.

Summary

Underlying the high performance of Solana and Sui is the way transactions are processed from submission to finality. The transaction lifecycle reveals the carefully engineered pipelines that give both chains low latency and high throughput.

Solana is designed around an account-centric model, and transactions pass through a series of multi-threaded, pipelined stages that ensure their validity. Transactions are submitted to the leader, which runs the TPU pipeline to verify them and schedule them accordingly: in parallel if they do not conflict, and sequentially if they do. When a validator is not producing blocks, it runs the TVU pipeline to replay and validate transactions. Both the TPU and the TVU use the runtime. Solana can process thousands of transactions in very short periods because transactions declare their accounts upfront. The runtime can therefore deterministically identify non-overlapping accounts and execute non-conflicting transactions in parallel. This makes Solana well suited to real-world use cases such as high-frequency trading, deeply composable DeFi, infrastructure-heavy dApps, and low-latency consumer dApps.

Sui, on the other hand, uses an object-centric model in which each object is tracked by a unique identifier. Because object ownership is known, Sui can determine statically that transactions touching disjoint sets of objects can execute in parallel. It uses dual execution paths, separating owned-object transactions from shared-object transactions, and signed checkpoints that keep Full nodes in sync. With these, it can process lightweight transactions without burdening the consensus layer, while also achieving reliable, fast finality and synchronization for new nodes. This lets it scale horizontally with increasing load. It is very useful for applications with massive object interaction, such as gaming, asset-centric exchanges, and highly composable programmable assets.

References

David J DeWitt and Jim N Gray: “Parallel database systems: The future of high performance database systems,” Communications of the ACM, volume 35, number 6, pages 85–98, June 1992. doi:10.1145/129888.129894

https://www.anza.xyz

https://docs.sui.io

https://www.helius.dev/blog/solana-gulf-stream

https://medium.com/solana-labs/gulf-stream-solanas-mempool-less-transaction-forwarding-protocol-d342e72186ad

https://medium.com/solana-labs/sealevel-parallel-processing-thousands-of-smart-contracts-d814b378192

https://solana.com/docs/

https://dev.to/aseneca/cross-program-invocations-and-pdas-the-combination-of-two-powerful-mechanism-on-anchor-4b93

https://arxiv.org/pdf/2105.11827

https://trustwallet.com/blog/cryptocurrency/sui-vs-solana

https://medium.com/solana-labs/tower-bft-solanas-high-performance-implementation-of-pbft-464725911e79

Jim N Gray and Leslie Lamport: “Consensus on Transaction Commit,” ACM Transactions on Database Systems (TODS), volume 31, number 1, pages 133–160, March 2006. doi:10.1145/1132863.1132867

Leslie Lamport: “Time, Clocks, and the Ordering of Events in a Distributed System,” Communications of the ACM, volume 21, number 7, pages 558–565, July 1978.

Michael J Fischer, Nancy Lynch, and Michael S Paterson: “Impossibility of Distributed Consensus with One Faulty Process,” Journal of the ACM, volume 32, number 2, pages 374–382, April 1985. doi:10.1145/3149.214121